It all started on the evening of Easter Monday this year. I was lying in bed, upset that I would have to go back to work on Tuesday, when I received a DM from a friend of mine containing a listing URL.

It's been a running joke that I have an addiction to buying random GPUs whenever given the opportunity, and it's well known that I really, really like Vega cards. She sent me this link jokingly, but little did she know she was about to find out how much I really love these cards.

Knowing that the listing wouldn't last long, I instantly shot the seller a message asking to pick all of them up whenever he was free, even right now if he wanted, to which he quickly agreed and sent me an address.

I didn't even think twice. I sprinted to the bathroom, started getting ready, informed my roommate of the horrible news (sorry again :/) and we jumped on the first train to the location with all the bags we could find at home.

We ended up not actually going to the address since we couldn't get there, instead meeting at the train station, which was a blessing in disguise.

To transport the cards home we split them between two big reusable convenience store bags as you can see below:

By far the worst part of this process was walking home. Carrying the bags in and out of the train isn't very difficult, but walking extended distances with them isn't very fun.

As you can imagine, testing 15 cards isn't very easy. I did 4 on the first evening, and I had originally begun testing only after giving each card a basic clean and repaste. This turned out to be very time consuming, so I started testing cards as they were, since the previous owner said he did the same and all of them had issues of some sort.

As you've already guessed, these cards probably come from a mining operation. As such, they are all filthy to the point of feeling gooey to the touch. It's frankly very disgusting.

But that was very far from all my issues. The thermal paste on all of them had essentially turned into concrete. Removing it, especially from the dies without underfill, is quite tricky since there's no real way to dissolve it all, so you kind of have to scrape the paste off using a plastic card or something like that.

All the dust on the cards is also a problem for the video outputs that weren't protected by their original dust covers. This was somewhat of a problem for testing because I'd often end up getting D6 errors, which turned out to just be bad connections to my monitor.

I also need to admit that when cleaning the cards I made one of the already bad ones worse: it is now stuck at 0.785V on the 1.8V line and, as far as I can tell, there are no shorts or anything of that sort.

To test every card I built up a process that goes a little something like this:

Plug a random GPU into my first PCIe slot, plug the card I want to test into my second slot, and boot into a Linux live ISO that has access to a folder containing amdvbflash and known good VBIOSes for the Gigabyte V64 and V56. Flash the card with the V64 VBIOS, check in the logs whether the old BIOS was a V56 one and, if it was, reflash as V56.

Once flashed, power off the system, unplug the other GPU and connect a display to the Vega. Boot into Windows and run the 3DMark Fire Strike stress test for 2 cycles. If the card gets through those, turn off the system and start cleaning it up; if it doesn't, put it on the pile of ones to look at later.
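For anyone who prefers code to prose, here is a rough Python sketch of the decisions in that process. It doesn't touch amdvbflash or 3DMark at all; it only models which VBIOS a card ends up with and which pile it lands on. The names (`Card`, `Pile`, `vbios_to_keep`, `triage`) and the `card-01` label are made up for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Pile(Enum):
    CLEAN_UP = "clean the card up"
    LOOK_AT_LATER = "look at later"


@dataclass
class Card:
    label: str      # hypothetical identifier, e.g. a sticker on the card
    old_vbios: str  # "v56" or "v64", as read from the flashing logs


def vbios_to_keep(card: Card) -> str:
    # Every card gets flashed as V64 first; only cards whose old
    # VBIOS was a V56 one get reflashed as V56 afterwards.
    return "v56" if card.old_vbios == "v56" else "v64"


def triage(cycles_passed: int, cycles_required: int = 2) -> Pile:
    # Passing all stress test cycles earns the card a cleaning,
    # anything less sends it to the pile to look at later.
    if cycles_passed >= cycles_required:
        return Pile.CLEAN_UP
    return Pile.LOOK_AT_LATER


card = Card(label="card-01", old_vbios="v56")
print(vbios_to_keep(card), "->", triage(cycles_passed=2).value)
```

The `cycles_required` default of 2 matches the quick first pass; the same function could be called with a higher count for a longer retest.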

Doing this I ended up with 7 working cards, which after cleaning were retested for 20 cycles instead. Out of the 7 working cards, 2 turned out to have little issues when flashed as 64s, so I rebenched them flashed as 56s and they passed the test just fine. (I still need to try lower power limit V64 VBIOSes as of writing.)

I also ended up with 6 cards that crash either when getting to the Windows desktop or during the stress test. (I still need to clean 4 of them, I haven't flashed some of them as 56s, and I have plans to volt mod at least 1 of them to see if memory voltage can save it, among other tests.)

Finally, I ended up with 2 cards that don't boot at all: 1 of them I somehow messed up when cleaning, and the other has never once POSTed or been detected. The latter is completely missing 1.8V and the former, as previously mentioned, outputs about 1V less than it should.
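As a quick sanity check on those numbers, here is a tiny Python tally. The dictionary keys and variable names are just labels picked for this sketch; the counts and voltages are the ones mentioned above.

```python
# Card counts after the first round of testing.
first_round = {"working": 7, "crashing": 6, "not booting": 2}
total_cards = sum(first_round.values())

# 1.8V rail on the card that got worse during cleaning.
expected_volts = 1.8
measured_volts = 0.785
shortfall = round(expected_volts - measured_volts, 3)

print(f"{total_cards} cards accounted for")
print(f"1.8V rail is short by {shortfall}V")
```

So all 15 cards are accounted for, and the damaged rail sits roughly 1V under where it should be.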

Nope, not so fast. Over the last weekend I had the chance to properly work on the Vegas some more, which led me to cleaning a bunch more, looking at the really broken ones and fixing a few.

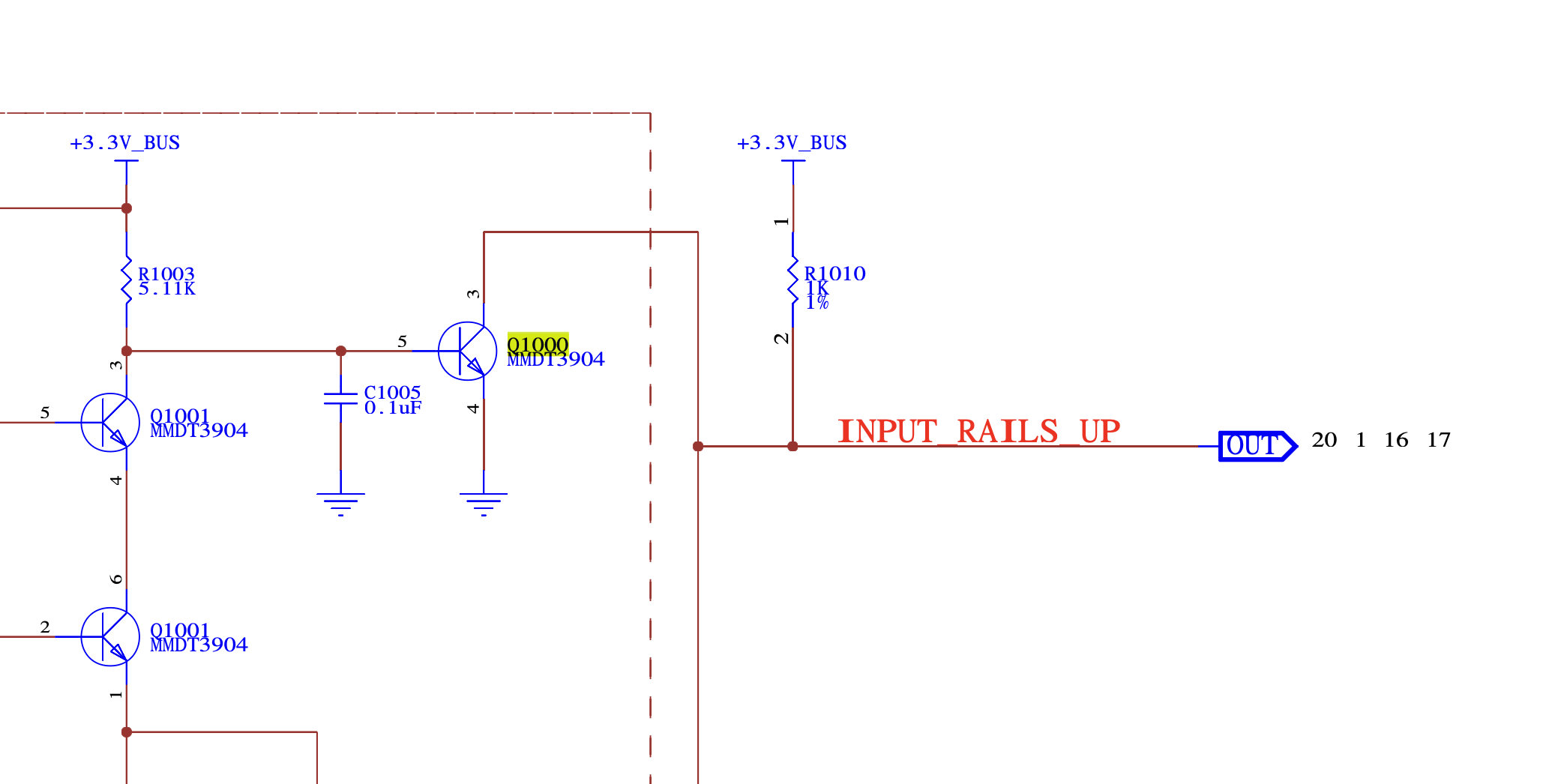

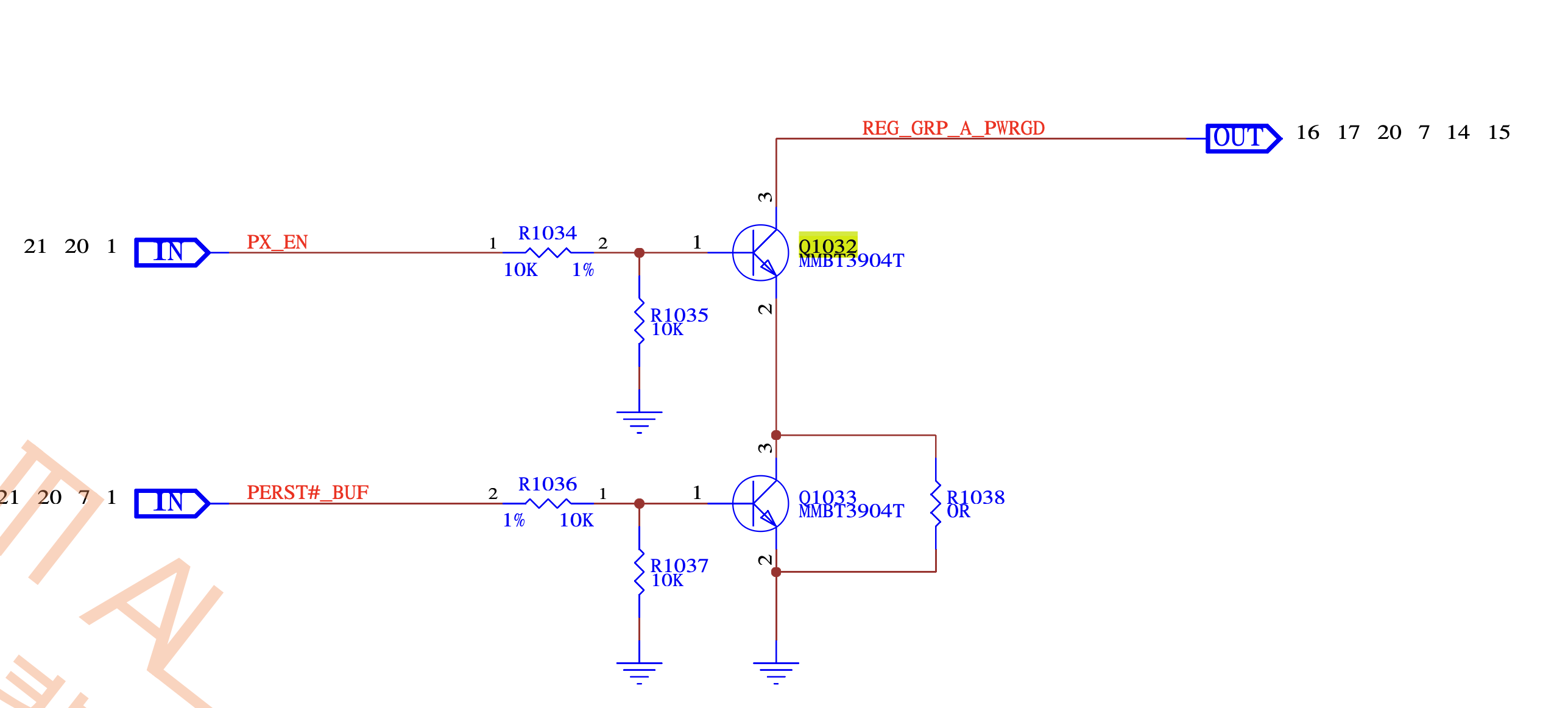

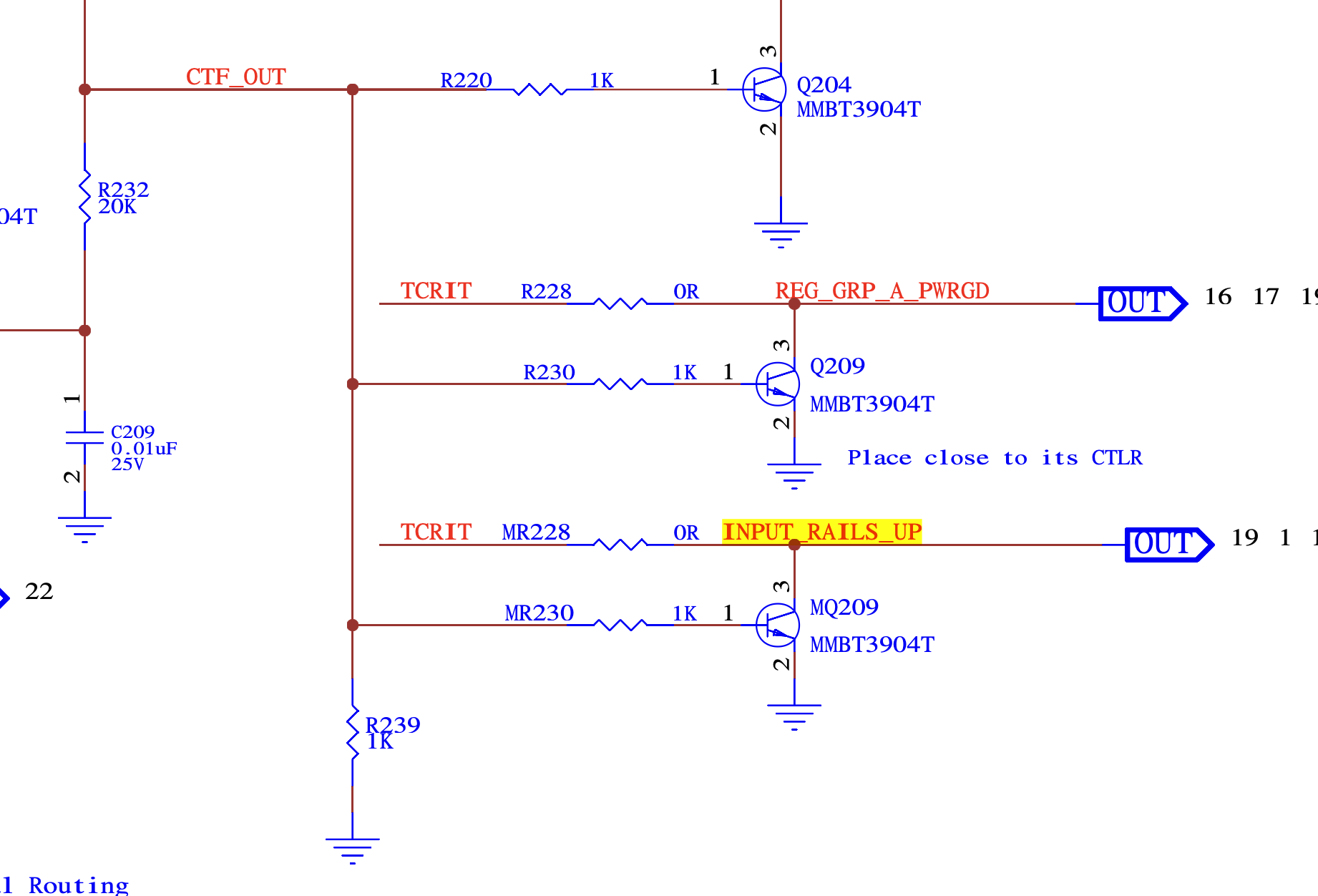

First, the one I've never seen turn on. This was the first broken one to get me interested because I knew it had decent odds of working, so I went into detail, measured a bunch and realised something was pulling the INPUT_RAILS_UP signal low, which I solved by simply removing Q1000 and bridging R1010. (It is the end of the logic chain of possible asserts, so this may have stopped the PROCHOT signal or similar protections from turning off the card; I originally intended this as a temporary solution.) After these modifications the 1.8V rail fired right up, but the IR3537 still didn't come up; addressing that took removing R230 and Q1032.

After all this work the card finally came back to life, except for the fan control, which seems to be stuck at 100%. (At some later stage I want to check what monitoring software can tell me; I assume the fault is within the die, but it might be an external probe or a passive component.) Overall I am satisfied, since this card will probably only be run for benchmarking sessions where the fan is maxed out anyway.

Second, all the dead cards were cleaned, repasted and rebenched. I found one that stabilised as a 64 and another that is half stable as a 56. Both of these probably have silicon issues, but looking at how poor the condition of the bulk capacitors is, I am confident in getting these up and running with some love and care.

Third, voltmodding. This wasn't very successful, but it led to me convincing my first test subject to start working again as a 56 after undoing the voltmod. The voltmod itself taught me that more voltage on HBM2 (especially over 1.35V) is just going to make the memory more unstable. Lowering the voltage requires an EVC2 that I don't have, but it may help out a bit. It's also worth noting that all of my cards are 64s and therefore run Samsung HBM2.

Fourth, one of the two cards that ended up being flashed as 56 I repasted properly, and while it is still stuttery (probably due to leaky silicon), it still benefits from running as V64.

All of this work effectively brought me to: 9 cards running as V64 (of which a couple are cursed, e.g. the stuttery one and the one with no fan control), 3 cards running as V56 (of which 2 are cursed due to barely making it as V56s), 1 with a dead 1.8V VRM, and 2 with dead silicon that are so degraded no amount of love has managed to bring them back so far (if I ever feel like it I'll try some mods to try and rescue these 2 and the other 2 V56s that barely make it).

While I don't plan on selling them, I knew getting into it that if I could get 4 to work I would have paid less than market price for these, so yes, I am in theoretical profit.

I honestly don't know. While I picture a GPUPI run with many Vegas on HWBOT, I don't think it's gonna happen soon, as I am short on space and cash and have other priorities. However, I would love to build a semi portable setup to show off at places like Congress, since it's a pretty impressive amount of compute even these days.

Honestly not much. Hopefully a lot about the PCB design of Vega and some more about silicon behaviors, especially in the context of degradation, but let's be real, I bought them because of the big multi-GPU stuff I can do with them :p.
